Hi fellow Clinical Informaticists, workflow designers, and other clinical architects,

Today's blog post is a slight deviation from my usual posts - it's actually a guest post from a smart young college student, Paul Lestz, whom I recently had the good fortune of working with on an educational internship.

Paul's particular interest is in the use of Artificial Intelligence (A.I.), so we discussed the current state of A.I. in healthcare, and ways to bring this technology to a broader audience.

So I'm very happy to report, after reading Paul's blog post below, that 'The Kids Are Alright' - if this is what our future leadership looks like, then I have great confidence in our future.

Please enjoy Paul's post below:

______________________________

There are few industry-wide reasons to be concerned - at least so far. While some healthcare institutions have begun deploying A.I. systems, we are not yet dependent on them for high-risk decisions. Human doctors still have responsibility and remain in control - which means now is a good time to educate ourselves on A.I., including its many compelling benefits, potential risks, and ways to mitigate those risks.

While reading, please remember - A.I. is a complicated topic that warrants our attention. Turning a 'blind eye' to A.I. will not stop the field from expanding into every industry, including healthcare. I hope this post provides some helpful education - as a starting point for future discussions - and helps to reduce the initial intimidation that A.I. discussions often induce.

Why do I believe that A.I. will continue to expand into the healthcare industry? It's because of the many potential benefits of using A.I. to manage the high-risk scenarios that healthcare workers commonly encounter. Among others, here are some major benefits offered by A.I.:

Adapted from: Artificial Intelligence in Medicine | Machine Learning | IBM

Cutting through the noise - A.I. can help make sense of the overwhelming amount of clinical data, medical literature, and population and utilization data to inform decisions.

Providing contextual relevance - A.I. can help empower healthcare providers to see expansively by quickly interpreting billions of data points - both text and image data - to identify contextually relevant information for individual patients.

Reducing errors related to human fatigue - Human error is costly and human fatigue can cause errors. A.I. algorithms don’t suffer from fatigue, distractions, or moods. They can process vast amounts of data with incredible speed and accuracy, all of the time.

Identifying diseases more readily - A.I. systems can be used to quickly spot anomalies in medical images (e.g. CT scans and MRIs).

From my perspective as a student, these are all compelling examples of how A.I. could help develop healthcare into a more modern, efficient, and reliably data-driven patient-care system.

Doing this, however, also requires examining the challenges that A.I. can bring with it - unsurprisingly, extremely new technology sometimes brings unexpected issues. Some of the known challenges of A.I. implementation include:

Adapted from: The Dangers of A.I. in the Healthcare Industry [Report] (thomasnet.com)

Distributional shift - A mismatch in data due to a change of environment or circumstance can result in erroneous predictions. For example, over time, disease patterns can change, leading to a disparity between training and operational data.

Insensitivity to impact - A.I. doesn’t yet have the ability to take into account the real-world impact of its false negatives or false positives.

Black box decision-making - With many A.I. systems, predictions are not open to inspection or interpretation. For example, a problem with training data could produce an inaccurate X-ray analysis that the A.I. system cannot factor in, and that clinicians cannot analyze.

Unsafe failure mode - Unlike a human doctor, an A.I. system can diagnose patients without having confidence in its prediction, especially when working with insufficient information.

Automation complacency - Clinicians may start to trust A.I. tools implicitly, assuming all predictions are correct and failing to cross-check or consider alternatives.

Reinforcement of outmoded practice - A.I. can’t adapt when developments or changes in medical policy are implemented, as these systems are trained using historical data.

Self-fulfilling prediction - An A.I. machine trained to detect a certain illness may lean toward the outcome it is designed to detect.

Negative side effects - A.I. systems may suggest a treatment but fail to consider any potential unintended consequences.

Reward hacking - Proxies for intended goals sometimes serve as 'rewards' for A.I., and these clever machines are able to find hacks or loopholes in order to receive unearned rewards, without actually fulfilling the intended goal.

Unsafe exploration - In order to learn new strategies or get the outcome it is searching for, an A.I. system may start to test boundaries in an unsafe way.

Unscalable oversight - Because A.I. systems are capable of carrying out countless jobs and activities, including multitasking, monitoring such a machine can be extremely challenging.

Unrepresentative training data - A dataset lacking in sufficient demographic diversity may lead to unexpected, incorrect diagnoses from an A.I. system.

Lack of understanding of human values and emotions - A.I. systems lack the complexity to both feel emotions (e.g. empathy) and understand intangible virtues (e.g. honor), which could lead to decisions that humans would consider immoral or inhumane.

Lack of accountability for mistakes - Because A.I. systems cannot feel pain and have no ability to compensate monetarily or emotionally for their decisions, there is no way to hold them accountable for errors. Blame is therefore redirected onto the many people related to the incident, with no one person ever truly held liable.
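To make the first item in the list above, distributional shift, a little more concrete, here is a minimal Python sketch of a very crude check. It compares the average of one input (patient age) in the data a model was trained on with the average in the data it now sees in operation. The function names, the sample ages, and the threshold of 1.0 are all hypothetical choices made for illustration; real monitoring would look at many more features and use more careful statistics.

```python
from statistics import mean, stdev


def shift_score(training_values, operational_values):
    """Return how many training standard deviations the operational mean
    has moved away from the training mean (a crude drift indicator)."""
    baseline_mean = mean(training_values)
    baseline_sd = stdev(training_values)
    return abs(mean(operational_values) - baseline_mean) / baseline_sd


def needs_review(training_values, operational_values, threshold=1.0):
    """Flag the model for human review when the drift exceeds the threshold.
    The default threshold of 1.0 is an arbitrary, illustrative value."""
    return shift_score(training_values, operational_values) > threshold


# Hypothetical patient ages: the model was trained on one population...
training_ages = [34, 41, 38, 45, 39, 42, 36, 40]
# ...but is now being used on a noticeably older one.
operational_ages = [67, 72, 70, 65, 74, 69, 71, 68]

print(needs_review(training_ages, operational_ages))
```

With these made-up numbers the check flags the model for review, because the operational patients are far older than the training patients. The point is only that a mismatch between training and operational data is something that can be measured and watched over time.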

Rather than feel discouraged when weighing the benefits of A.I. against the risks above, I'd like to share that there are solutions to many, if not all, of these known risks - through commitment and detailed policy work.

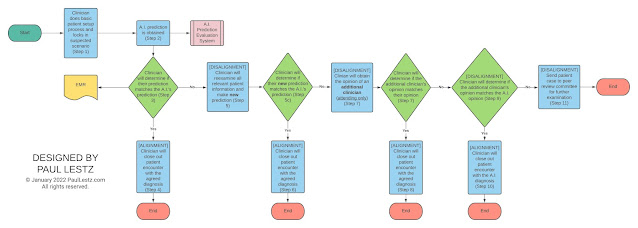

For instance, let’s take a look at the challenge underlined above: automation complacency. At first glance, one might think it would be too difficult to resolve this extremely conceptual issue, intrinsic to the mind of the clinician. However, automation complacency poses little to no problem if the following workflow is implemented:

(Sample policy/workflow for managing automation complacency)

I designed this visual to help simplify the complex process of reducing automation complacency to a few easy-to-follow steps.

Resolving the issues related to A.I. does not mean instantly coming up with a single, lengthy procedure in the hopes that it will work. Instead, resolving challenges means breaking the problem down into pieces and isolating different steps in order to achieve the desired result.

When developing the flow chart above, I had to determine what exactly was the root of the unwanted issue:

Q: How could a clinician be biased towards picking the A.I. algorithm’s result without considering alternatives?

A: It would most likely be because they knew the A.I.’s prediction before, or at the same time as, they made their initial diagnosis.

While we, as humans, might think that we are not biased by certain information, this assumption is often an illusion. Subconscious biases tend to be the most powerful because we do not realize how much they affect us.

In order to solve this problem, my workflow above mandates that the clinician provide and lock in their initial opinion before being provided the A.I. algorithm’s prediction. By doing so, we resolve our first issue of initial, subconscious biases.
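For readers who think in code, the 'lock in first, reveal second' rule described above could be sketched in Python roughly as follows. This is only an illustration under my own assumptions: the `CaseReview` class, its method names, and the sample diagnoses are hypothetical, and the sketch covers only the lock-in step, not the full workflow.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class CaseReview:
    """One case reviewed by a clinician with A.I. assistance.
    All names here are hypothetical and for illustration only."""

    ai_prediction: str
    clinician_opinion: Optional[str] = None

    def lock_in_opinion(self, opinion: str) -> None:
        # The initial opinion can be recorded once and not changed afterwards.
        if self.clinician_opinion is not None:
            raise ValueError("Initial opinion is already locked in.")
        self.clinician_opinion = opinion

    def reveal_ai_prediction(self) -> str:
        # The A.I. result stays hidden until the clinician has committed.
        if self.clinician_opinion is None:
            raise RuntimeError("Lock in an initial opinion first.")
        return self.ai_prediction


review = CaseReview(ai_prediction="pneumonia")

try:
    review.reveal_ai_prediction()  # too early: nothing is locked in yet
except RuntimeError as error:
    print(error)

review.lock_in_opinion("bronchitis")
print(review.reveal_ai_prediction())
```

The design choice is that the software, rather than the clinician's willpower, enforces the order of events: the prediction simply cannot be requested until an initial opinion exists.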

______________________________

As I have just demonstrated, solving A.I.-related issues is often a matter of breaking down problems and coming up with small solutions that together add up to a working whole.

So, if there are often ways to mitigate the risks of these A.I.-related issues - are we good to go? The answer: it’s complicated.

Often, users (e.g. healthcare institutions) are not actually making their own algorithms. Instead, they purchase them. Therefore, one must consider various factors in deciding which A.I. algorithms to purchase. Unfortunately, after an extensive literature search, I could not find a helpful, cohesive guide to the factors to consider when purchasing A.I. solutions, so I would like to propose the following guidelines:

(Sample questions to consider in A.I. purchasing)

I created the infographic above to frame some useful questions to ask a vendor when considering the purchase of an A.I. solution.

______________________________

Generally, I hope that this piece serves two primary purposes:

The first is to convince you that, with good understanding and planning, A.I. typically brings about more good than harm in the world.

The second, which assumes that you have already embraced the first, is to convince you not to take A.I. for granted, but to approach it thoughtfully so that institutions (and the people who work at them) solve problems, purchase algorithms, and engage with the world of A.I. responsibly.

It's generally important to prepare and 'do your homework' before engaging in A.I. discussions. This preparation is especially important if we want to maximize the benefits of A.I. and minimize the risks. This post’s goal, therefore, is to shift the focus of the A.I. discussion from its use to its purchase. After all, a well-considered purchase combined with a thoughtful implementation often leads to more responsible ownership and successful outcomes. Conversely, inadequate preparation can lead to unexpected outcomes.

______________________________

As a student, and without a deeper knowledge of the exact workflow expectations for a particular circumstance, I am unfortunately unable to offer any more-detailed perspectives. However, I hope this initial post helps to 'get the ball rolling' on some important discussions related to proper A.I. planning, purchasing, and use. The right answers will still need to be evaluated and defined by planners, users, regulatory agencies, and society.

______________________________

Remember, this blog is for educational and discussion purposes only - your mileage may vary. Have any thoughts or feedback to share about A.I. in healthcare? Feel free to leave them in the comments section below!